The future of human-robot partnerships is already here and it’s rapidly becoming more widely distributed. Few people now see anything remarkable about interacting with disembodied AI, such as Siri, Alexa and Google Assistant.

Soon, it’s likely human workers will be working alongside embodied AI. That is, machines that sci-fi types refer to as androids, automatons, cyborgs, droids or humanoids, and academics call social robots.

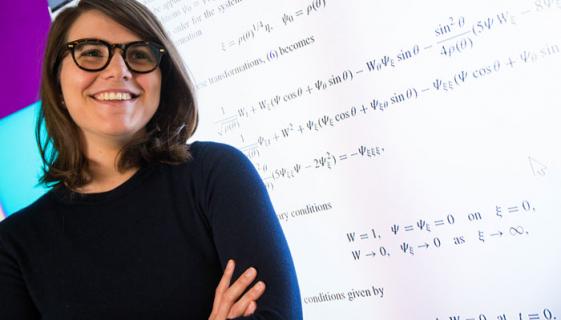

Dr Sarah Bankins is a Senior Lecturer at Macquarie Business School. She is one of a growing number of academics building on research into the potential form and consequences of human-robot relationships in the workplace.

Along with her co-author Dr Paul Formosa, an Associate Professor at Macquarie University’s Department of Philosophy, Bankins recently published a paper “exploring the implications of workplace social robots and a human-robot psychological contract”.

Robot-human relationships 101

A robot is any programmable machine that can carry out a task, and workers have been interacting with unsophisticated workplace robots for many years.

While they might have cursed, cajoled or pleaded with such robots, workers haven’t usually had anything resembling a relationship with them. But more recently, machines designed to look and act like humans have been coming on the market.

“It’s still early days but not as early as many believe,” Bankins says.

“In Japan, there are robot nurse’s assistants. Elsewhere in Asia, robots are popping up in banks and shopping centres and offering customers assistance via their torso touch screens. The more advanced ones, such as Pepper, can remember faces, recognise human emotions and engage in conversations.”

Bankins points out that as machines increasingly resemble humans, and start interacting with humans on a day-to-day basis, people will be inclined to start treating them like sentient beings.

“There’s some fascinating research detailing the relationship soldiers form with the robots they use to detonate land mines,” Bankins says.

“Soldiers would welcome these robots back as heroes when they returned after being repaired. They gave them elaborate funerals if they were blown up in combat. They could even be awarded medals. The soldiers believed they had a relationship involving reciprocal obligations with their robots – ‘They do something for me, I do something for them in return.’”

Psychological contracting in the absence of a psyche

A psychological contract is a set of unspoken, but important, expectations about what two or more individuals owe each other. For example, an employee may work hard and expect their manager to promote them in return.

Historically, psychological contracts have been examined in the context of humans possessing free will. For instance, in the above example, the employee can generally choose whether to work hard. Their manager can also choose whether to promote them. Both parties realise the other party has the discretion to act as they deem appropriate.

What intrigues Bankins, who comes from an HR background, is how humans will form psychological contracts with their robot colleagues, particularly those powered by AI and machine learning.

These robots can think and learn on their own and adapt to their surroundings, including the humans with whom they interact.

“Because we focus on robots that are powered by AI, and are minimally programmed, they learn on their own. This adds some real complexity to how much we can understand, and how and why they behave as they do,” Bankins says.

“This also means they should be able to adapt really well to us as humans – hence the potential issue of becoming almost ‘too perfect’ workmates.”

Given such advanced social robots are still a rarity, there’s little empirical evidence available on their impact in workplaces. So, Bankins and Formosa conducted a thought experiment to investigate how robot-human workplace relationships are likely to play out.

Andromet and Ashley’s synthetic relationship

“The limitations of thought experiments are self-evident but when contemplating the future they can be useful,” Bankins says. “That’s why scientists such as Galileo, Newton and Einstein conducted them and why they are widely used in fields like philosophy.”

After taking into account existing and emerging technology, Bankins and Formosa created Andromet. Andromet is a social robot capable of conversing with a co-worker and identifying and responding to their emotional state. Andromet was partnered with Ashley, a well-educated, intelligent and ambitious human consultant.

Bankins and Formosa spent many hours playing out different scenarios and imagining how the relationship between Andromet and Ashley could develop.

“The main thing to emerge was how lopsided the relationship could inevitably become,” Bankins says. “To some extent, Ashley will relate to Andromet as if he is human. But she will be regularly reminded he is a machine. One without feelings that can be hurt or interests outside of the workplace.

“Andromet and Ashley will have a relationship, but it will be what we called a ‘synthetic relationship’. That is, an unnatural relationship where Ashley can give very little while taking a great deal.

“The psychological contract Ashley will have with Andromet will be something like, ‘I’ll occasionally check that you’re functioning properly. In return, you’ll placate me when I’m irritable, cheer me up when I’m sad, reassure me when I’m nervous, organise my schedule faultlessly and process data to deliver insights that make me look good in front of clients.’”

The potential problem with synthetic relationships – at least for the foreseeable future – is not that robots like Andromet will become self-aware then run amok. It’s that people like Ashley will withdraw from their human colleagues, start treating them like robots, or both.

Will robots train us to become less human?

“There’s already concern about technology making us less emotionally intelligent,” Bankins says.

“Parents worry their children are finding it more difficult to engage in face-to-face communication as a result of the prevalence of texting, messaging and email. And it’s not only the impacts on children; some researchers suggest constant connection to technology can diminish adults’ attention and empathy – those uniquely human skills that differentiate us from robots.

“After conducting our thought experiment, Dr Formosa and I raise the prospect of human workers with access to an unflaggingly solicitous robo-colleague not wanting to invest the time and effort required to maintain good relationships with their flesh-and-blood co-workers.

“It all comes down to questions of design,” Bankins continues. “What do we think it’s appropriate for social robots to do? What do we not want them to do? These are issues many international organisations are now wrestling with.”

Ultimately, social robots may have to be designed to behave like flawed humans to ensure humans aren’t lulled into one-sided relationships with them.

“Do we want robo-colleagues that meet all our needs and ask little in return? Or ones that nudge us to be considerate? With human-robot workplace partnerships becoming more commonplace, including the use of social robots, the leaders of organisations have to start thinking about these questions.”

Dr Sarah Bankins is a Senior Lecturer in the Department of Management at Macquarie Business School.

Dr Paul Formosa is an Associate Professor at Macquarie University’s Department of Philosophy.